In this article, I explore generating 3D assets from 2D reference images. Control over the final result is crucial, particularly when you need a specific asset, such as a building with a particular architecture or unique adornments.

For this experiment, I went with architecture. The versatility of buildings allows for a wide range of designs while adhering to a consistent style. The goal was to determine whether it’s possible to dictate the style, arrangement of elements, camera angles, and features like the number of stories or the architectural shape of a structure.

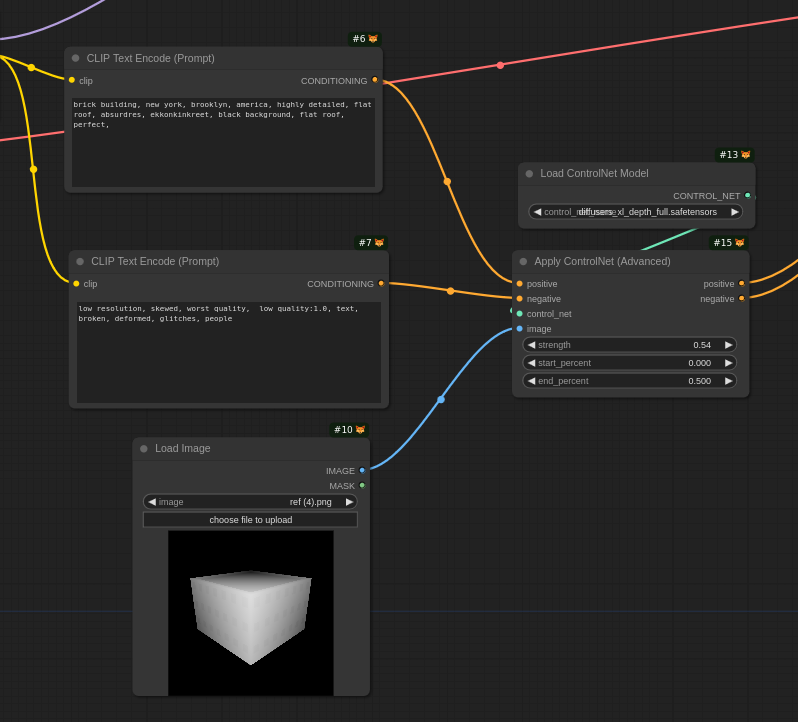

I decided that it would be a brick building. The process began with assembling a collection of style references in SDXL:

Challenges in 3D object generation and FOV issues

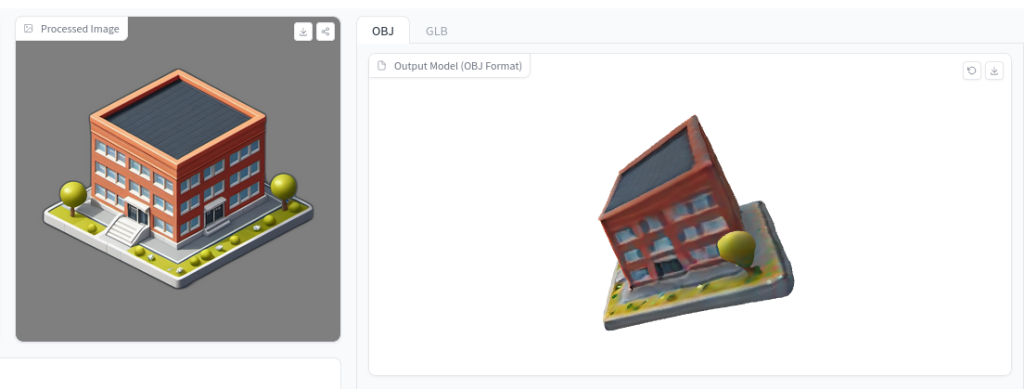

My initial attempts at generating 3D objects were disappointing:

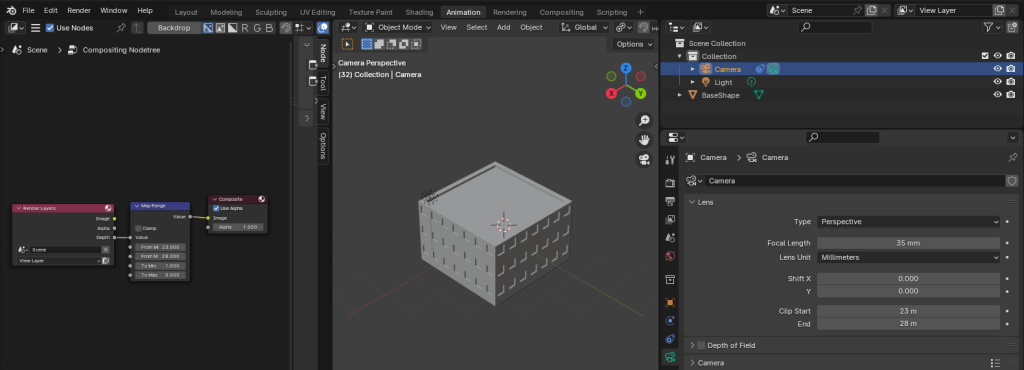

It became apparent that the network struggles with this FOV. After some experimentation, I discovered that a 35 mm focal length yields accurate results, which I’ll need to remember moving forward.

Variations in asset generation, camera parameter control, and basic building shape

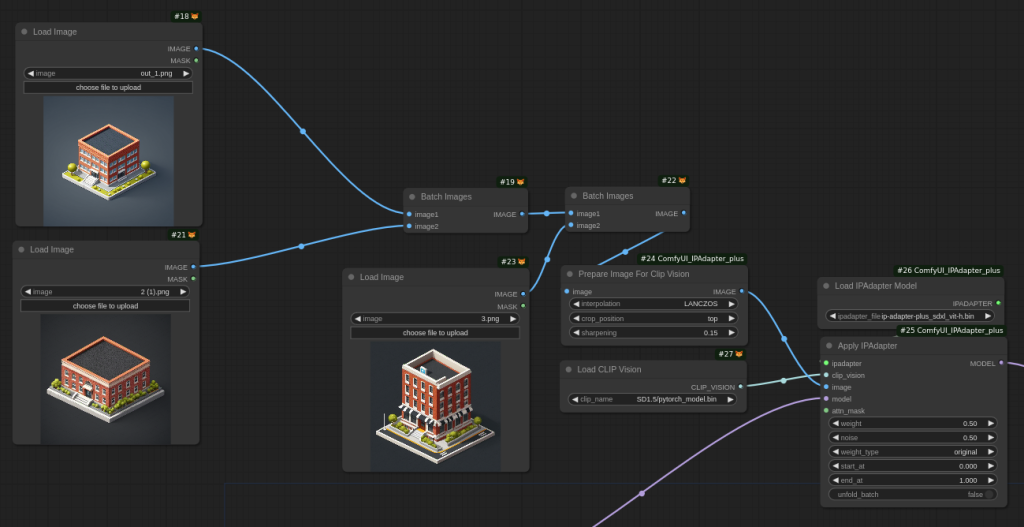

To keep the building’s style consistent, I used an IP-Adapter. It keeps the output close to the reference image while still allowing some flexibility. I hoped it would work well in tandem with a ControlNet, preserving the style while respecting the constraints the ControlNet imposes.

To control the scene composition and the camera FOV, which I had learned is crucial, I began with a simple Blender scene. It contained a basic building whose windows define the number of floors and the number of windows per floor, a camera set to the correct FOV, and compositor nodes configured to output a depth map.
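
A raw depth pass stores camera distances in scene units, while depth-conditioned models are usually fed a normalized map in which closer surfaces are brighter. The sketch below shows that normalization in plain Python. It is not the compositor node setup used for this experiment; the function name, the nested-list input, and the `background_threshold` value (standing in for the very large distance a renderer assigns to empty background) are assumptions made for illustration.

```python
def depth_to_control_image(depth, background_threshold=1e6):
    """Map raw camera distances to 0-255 values where near is bright.

    depth: list of rows of floats (distance from the camera).
    Pixels at or beyond background_threshold are treated as empty
    background and set to 0 (black). The threshold is an assumed value.
    """
    # Collect only the pixels that belong to actual geometry.
    foreground = [d for row in depth for d in row if d < background_threshold]
    if not foreground:
        return [[0 for _ in row] for row in depth]

    near, far = min(foreground), max(foreground)
    span = far - near

    image = []
    for row in depth:
        out_row = []
        for d in row:
            if d >= background_threshold:
                out_row.append(0)        # background stays black
            elif span == 0:
                out_row.append(255)      # flat scene: everything is "near"
            else:
                # Invert so the nearest surface maps to 255, the farthest to 0.
                out_row.append(round(255 * (far - d) / span))
        image.append(out_row)
    return image


if __name__ == "__main__":
    sample = [[2.0, 3.0], [6.0, 1e10]]
    print(depth_to_control_image(sample))
```

Note that in this simple version the farthest foreground pixel ends up as dark as the background; reserving a small minimum brightness for geometry would be an easy variation.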

In ComfyUI, I used a depth ControlNet to guide the results:

While tweaking the weight, I found that 0.5-0.6 hit the sweet spot. Anything lower noticeably changed the FOV, while anything higher put too much emphasis on matching the depth map and made the results less interesting. These values clearly aren’t one-size-fits-all and will need adjusting for each scenario.

The outcomes were acceptable:

The aesthetic was on par with my references, yet I maintained control over the final product through camera settings, scene composition, and the building’s features like floors and windows, all aligned with my depth map.

I played around a bit by removing some windows and throwing in an extra floor.

Then, I decided to up the ante with a more intricate design:

The end results closely matched the shape I had envisioned:

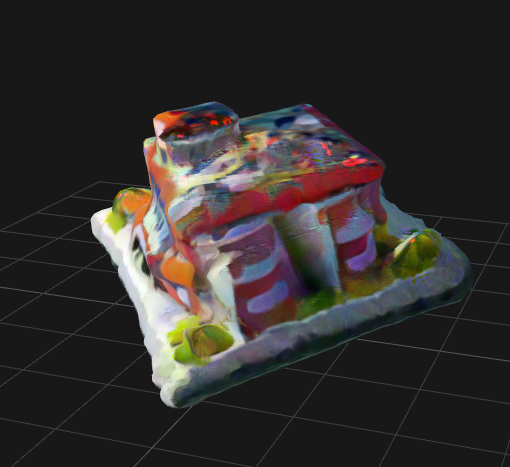

When it came to mesh generation from these images, TripoSR, again, was quick but generated low-quality outputs. Despite that, the building’s form was mostly correct and didn’t suffer from the distortion seen in my initial isometric camera experiments:

The asset’s rear side, which wasn’t captured in the original image, didn’t fare as well:

In pursuit of higher-quality assets, I turned to threestudio and Stable Zero123, following the methodology outlined in my earlier post:

The quality is not much better, and it looks like the FOV problem is back. I did not find any information on the best FOV for Stable Zero123, but the Zero123 paper mentions a value of 49.1.
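
The two numbers mentioned so far, 35 mm and 49.1, are in different units, so comparing them needs a conversion between focal length and field of view. Here is a minimal sketch using the standard pinhole camera relation. It assumes a 36 mm sensor width (Blender's default) with horizontal sensor fit, and it reads 49.1 as a horizontal FOV in degrees; both are assumptions, and the function names are made up for this example.

```python
import math

# Assumption: 36 mm sensor width, horizontal sensor fit.
SENSOR_WIDTH_MM = 36.0


def focal_length_to_fov(focal_mm, sensor_mm=SENSOR_WIDTH_MM):
    """Return the field of view in degrees for a given focal length in mm."""
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_mm)))


def fov_to_focal_length(fov_deg, sensor_mm=SENSOR_WIDTH_MM):
    """Return the focal length in mm that produces the given FOV in degrees."""
    return sensor_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))


if __name__ == "__main__":
    print(f"35 mm    -> {focal_length_to_fov(35.0):.1f} deg")
    print(f"49.1 deg -> {fov_to_focal_length(49.1):.1f} mm")
```

Under these assumptions, a 35 mm lens corresponds to roughly 54.4 degrees, and a 49.1 degree FOV corresponds to roughly 39.4 mm, so the two settings are close but not identical.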

The results are a bit better, but still far from perfect. To compare them with a commercial solution, I also tried Meshy.ai, but it failed to generate a good result as well:

The pipeline for generating image variations worked, and it gives fine control over the output. However, this particular image gave bad results in 3D, and the generated objects are terrible. I should test the pipeline on something less challenging for the 2D-to-3D part of the process.

Last hope: SV3D: Novel Multi-view Synthesis and 3D Generation from a Single Image using Latent Video Diffusion

Stability AI just released a new model, SV3D, so I thought that such a hard case might be a nice way to try it out.

And the results are great!

I’ll explore it further in the future. There is still no way to convert the result into a 3D object, but perhaps good old photogrammetry will work?